Software benchmarks for project predictability

You may want to compare how you are doing against others, either internally or against teams in other organisations. For comparisons between internal teams you can establish your own internal benchmarks, whilst for comparisons against others in the software industry you’ll be looking for industry software benchmarks. This article focusses on appropriate benchmarking of software work so that you can compare your teams’ performance against others.

NB: This article is not about software performance (speed) benchmarking.

Software benchmarking can help organisations understand how well they are doing and where they might look to improve. With benchmarks, managers can also answer the vital questions about a software project: “how long will it take?” and “how much will it cost?” Benchmarks can also help inform valuable business decisions about which software work to focus on.

Background

Software is becoming a key differentiator between successful and unsuccessful companies in most sectors. The ability to produce high-quality, innovative and differentiating software capabilities faster than competitors is one key to outperforming them. Eventually it may become a leading factor in corporate survival; it already is for technology-based companies.

Knowing how your company performs against others in its ability to deliver software capabilities is increasingly important. This is where benchmarking comes in.

Developer Productivity Benchmarking

The focus tends to be on developer productivity, for three reasons:

- Variable. Developer productivity varies significantly, with the most skilled developers being 100 times more productive than the least skilled.

- Significant. Developer cost is usually the single biggest cost component of delivering software.

- Valuable. Companies depend on delivering software to remain competitive, so the rate at which it can be delivered matters to an organisation.

The base measure of output is functional size, but don’t ignore quality.

The most useful base measure of output is the amount of user-recognisable functionality that a developer or development team can deliver to high quality. The best way to determine this is not by counting lines of code but by measuring the functional size of the software delivered. Functional size is measured using the COSMIC method, an ISO standard (ISO/IEC 19761), in COSMIC Function Points (CFP). The CFP is the most modern and universal standard measure for software size: a technology-independent measure of user-recognisable size. Developer and development team productivity can then be compared in average CFP per month or average CFP per sprint.

Developer Productivity Benchmarks

Each developer or team can be benchmarked in CFP per sprint.

Warning: productivity metrics should only count functionality whose quality has been verified.

| Developer Productivity (hours per CFP) | Low Competence | Medium Competence | High Competence |
|---|---|---|---|
| Package implementation | 6 | 2 | 1 |
| Low code | 8 | 3 | 2 |
| High level language (typical) | 25 | 8 | 4 |
| Highly regulated domain | 80 | 20 | 12 |
| Low level language / firmware | 80 | 20 | 12 |

These are values observed by the author across dozens of modest-sized projects (250-1500 CFP). You should establish and maintain your own benchmarks. They are often also expressed as the inverse, CFP delivered per unit of time, e.g. 20 CFP per month.

Rule of thumb: 10-20 CFP per developer per month. Observed developer productivity ranges from 2 to 200 CFP per developer per month, depending on circumstances, domain, tools and competence.
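The hours-per-CFP table can be inverted into CFP per developer per month. The sketch below assumes roughly 160 productive hours per developer per month (an assumption, not a figure from the article). At that rate the medium-competence high level language value of 8 hours per CFP gives 20 CFP per month, and the slowest cell gives 2 CFP per month. The names `HOURS_PER_CFP`, `cfp_per_month` and `effort_hours` are illustrative.

```python
# Hours per CFP from the table above, as (low, medium, high) competence.
HOURS_PER_CFP = {
    "Package implementation": (6, 2, 1),
    "Low code": (8, 3, 2),
    "High level language (typical)": (25, 8, 4),
    "Highly regulated domain": (80, 20, 12),
    "Low level language / firmware": (80, 20, 12),
}
COMPETENCE = ("low", "medium", "high")

# Assumption: about 160 productive hours per developer per month.
HOURS_PER_MONTH = 160


def cfp_per_month(category: str, competence: str,
                  hours_per_month: float = HOURS_PER_MONTH) -> float:
    """Invert hours per CFP into CFP delivered per developer per month."""
    hours = HOURS_PER_CFP[category][COMPETENCE.index(competence)]
    return hours_per_month / hours


def effort_hours(size_cfp: float, category: str, competence: str) -> float:
    """Estimated developer hours needed to deliver size_cfp at this benchmark."""
    return size_cfp * HOURS_PER_CFP[category][COMPETENCE.index(competence)]


print(cfp_per_month("High level language (typical)", "medium"))       # 20.0
print(effort_hours(500, "High level language (typical)", "medium"))   # 4000
```

Replace the benchmark values and the hours-per-month figure with your own data once you have it.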

Team Productivity Benchmarks

A general guide is that a team of seven (4 developers, 2 testers and a business analyst) will on average produce at a rate of 4 hours per CFP, i.e. 80 CFP per 2-week sprint. This may be reduced if bugs from previous sprints are carried forward to be fixed.

Rule of thumb: 70 CFP per sprint is a reasonable first estimate, but you should establish your own benchmarks.
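A minimal sketch of the team arithmetic, assuming the 4 hours per CFP refers to developer hours (4 developers × 40 hours × 2 weeks = 320 hours, or 80 CFP) and that hours spent on carried-forward bugs are lost to new work. The function name and parameters are hypothetical, and the testers and business analyst are not modelled separately.

```python
def sprint_capacity_cfp(developers: int = 4,
                        hours_per_week: float = 40,
                        sprint_weeks: int = 2,
                        dev_hours_per_cfp: float = 4,
                        bug_carry_forward_hours: float = 0) -> float:
    """Estimate the CFP a team delivers in one sprint.

    Assumes the rate is in developer hours and that time spent fixing bugs
    carried forward from earlier sprints is unavailable for new functionality.
    """
    available = developers * hours_per_week * sprint_weeks - bug_carry_forward_hours
    return max(available, 0) / dev_hours_per_cfp


print(sprint_capacity_cfp())                            # 80.0
print(sprint_capacity_cfp(bug_carry_forward_hours=40))  # 70.0
```

A modest bug carry-forward of one developer-week brings the estimate down to the 70 CFP first estimate.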

Most managers want to know how their team and company compare with others on:

- Software development productivity

- Software quality

- Software spend

- Software value for money

Internal software benchmarking compares different teams or projects within an organisation. External or industry software benchmarking compares these characteristics with external norms.

This article explores what software metrics can be benchmarked and what metrics to use so as not to stimulate unintended consequences.

The actual benchmark values presented are for guidance only and should not be considered definitive benchmarks. In all cases internal benchmarks are best, compiled from previous work within your organisation.

AI was not used to write this post.

Software Resourcing Benchmarks

For team planning, leaders need to know the ideal amount of work one person can handle. Software is knowledge work, and developers’ cognitive constraints are such that each can only reasonably handle a certain amount of functionality. Our observations are that this is around 180 CFP. This means that, whatever the project, you probably need more than one developer if your total scope is more than 180 CFP. The table below is a guideline for typical capacity by role.

| Resourcing | CFP per individual |
|---|---|
| Project Manager | 1000 |
| Business Analyst / Product owner | 400 |
| Tester | 300 |
| Developer | 180 |

For example, a 1000 CFP project will need 1 Project Manager, 2 Business Analysts, 3 testers and 5 developers. These numbers are dependent on the competency and domain knowledge of those involved. Wherever possible it is best to strive for smaller teams of highly competent individuals.

Note that for software maintenance work a single developer can usually handle more than 180 CFP: typically one full-time developer per 1500-2500 CFP.
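The resourcing table can be turned into a quick staffing estimate. This sketch rounds down with a minimum of one person per role; that rounding is an assumption, chosen because it reproduces the 1000 CFP example above, and rounding up would give a more conservative plan. The maintenance function simply applies the 1500-2500 CFP per developer range. Function names are illustrative.

```python
import math

# CFP one person can typically handle, by role (from the resourcing table).
CFP_PER_ROLE = {
    "Project Manager": 1000,
    "Business Analyst / Product owner": 400,
    "Tester": 300,
    "Developer": 180,
}


def project_staffing(scope_cfp: float) -> dict[str, int]:
    """Headcount per role for new development (rounded down, at least one each)."""
    return {role: max(1, math.floor(scope_cfp / capacity))
            for role, capacity in CFP_PER_ROLE.items()}


def maintenance_developers(portfolio_cfp: float) -> tuple[float, float]:
    """Range of full-time maintenance developers at 1 per 1500-2500 CFP."""
    return portfolio_cfp / 2500, portfolio_cfp / 1500


print(project_staffing(1000))
print(maintenance_developers(6000))  # (2.4, 4.0)
```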

Software Quality Benchmarks

Software quality is a matter of commercial advantage. The organisation that consistently delivers quality software that is useful to customers is likely to outperform its rivals. Benchmarking of software quality metrics therefore becomes an important corporate comparator.

Defect Potentials

The number of defects in a piece of software can be anticipated from its size. It stands to reason that a bigger piece of software has the potential to be buggier than a smaller one. The defect potential is the number of defects likely to be in a piece of software before any defect prevention, detection or removal measures are applied.

Typical defect potentials are 4-5 defects per CFP. These defects will vary in severity and in their root source. Typically as follows:

| Defect Source | Defect Potentials per FP (comparable with CFP) |
|---|---|
| Requirements | 1 |
| Design | 1.25 |
| Code | 1.75 |
| Documents | 0.6 |
| Bad fixes | 0.4 |
| Total | 5 |

Source: Capers Jones, The Economics of Software Quality, 2011.

What this means is that there are likely to be 5 defects per CFP unless we perform quality improvement measures to find and fix defects from each of these areas.

Awareness of defect potential helps to answer these questions:

- How many defects do we need to find to achieve reasonable quality?

- How many defects are likely to be outstanding?

- How many more tests do we need to run?

- Are we ready to go live?
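Because defect potential scales linearly with size, the first two questions can be given rough numbers. The sketch below applies the per-source rates from the table to a project size. `outstanding_defects` is a deliberately naive estimate (total potential minus defects found so far) that ignores the fact that some defects are never found. Function names are illustrative.

```python
# Defect potentials per function point by source (Capers Jones, 2011),
# treated as comparable with CFP as in the table above.
DEFECT_POTENTIAL_PER_CFP = {
    "Requirements": 1.0,
    "Design": 1.25,
    "Code": 1.75,
    "Documents": 0.6,
    "Bad fixes": 0.4,
}


def defect_potential(size_cfp: float) -> dict[str, float]:
    """Expected defects per source before any defect removal activity."""
    return {source: rate * size_cfp for source, rate in DEFECT_POTENTIAL_PER_CFP.items()}


def outstanding_defects(size_cfp: float, found_so_far: int) -> float:
    """Naive estimate of latent defects: total potential minus those already found."""
    return max(sum(defect_potential(size_cfp).values()) - found_so_far, 0.0)


print(round(sum(defect_potential(400).values())))  # 2000
print(outstanding_defects(400, 1500))              # 500.0
```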

Defect Removal Efficiency

Quality of a software product is generally observed as the number of defects exposed in production. The number of “new” defects diminishes over the first 3 months of use, so defect removal efficiency (DRE) can be measured as the defects removed before release as a percentage of the total: those removed before release plus those found in the first 3 months of use. The defects delivered per CFP are then the defect potential, which is based on the amount of functionality delivered, multiplied by (1 - DRE).

| Defect Source | Defect Potentials per FP (comparable with CFP) | Defect Removal Efficiency | Delivered Defects per CFP |
|---|---|---|---|
| Requirements | 1 | 77% | 0.23 |
| Design | 1.25 | 85% | 0.19 |
| Code | 1.75 | 95% | 0.09 |
| Documents | 0.6 | 80% | 0.12 |
| Bad fixes | 0.4 | 70% | 0.12 |
| Total | 5 | 85% | 0.75 |
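Each row of the table follows delivered defects = defect potential × (1 - DRE). The sketch below reproduces the table and also shows DRE measured from defect counts in the way Capers Jones defines it: defects removed before release as a share of those plus the defects found in the first 90 days of use. Function names are illustrative.

```python
# (defect potential per CFP, defect removal efficiency) by source, from the table.
SOURCES = {
    "Requirements": (1.0, 0.77),
    "Design": (1.25, 0.85),
    "Code": (1.75, 0.95),
    "Documents": (0.6, 0.80),
    "Bad fixes": (0.4, 0.70),
}


def delivered_defects_per_cfp(sources=SOURCES) -> dict[str, float]:
    """Defects per CFP that escape to production: potential * (1 - DRE)."""
    return {source: potential * (1 - dre) for source, (potential, dre) in sources.items()}


def overall_dre(sources=SOURCES) -> float:
    """Share of all potential defects removed before delivery."""
    total_potential = sum(potential for potential, _ in sources.values())
    return 1 - sum(delivered_defects_per_cfp(sources).values()) / total_potential


def dre_from_counts(found_before_release: int, found_first_90_days: int) -> float:
    """Measured DRE: pre-release defects / (pre-release + first-90-day defects)."""
    return found_before_release / (found_before_release + found_first_90_days)


print(round(sum(delivered_defects_per_cfp().values()), 3))  # 0.745
print(round(overall_dre(), 3))                              # 0.851
print(dre_from_counts(850, 150))                            # 0.85
```

The unrounded total of 0.745 delivered defects per CFP is what the table shows as 0.75.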

Software Benchmarking – Schedules

The schedule, or time taken to deliver software, is a direct consequence of the productivity of the team(s) working on it. We have looked at team productivity of around 70 CFP per two-week sprint, or 140 CFP per month. This gives us a clear guide to answering the question “When will it be ready?”

Rule of thumb: For small projects of less than 1000 CFP: a team will typically deliver 140 CFP per month.

Rule of thumb: For large projects of 1000 CFP or more, productivity diminishes with size: the number of months to deliver = CFP ^ 0.4
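Both schedule rules of thumb can be combined into one rough estimator. Treating exactly 1000 CFP as a large project is an assumption, since the text distinguishes only “less than 1000” and “over 1000”. The function name is illustrative.

```python
def schedule_months(size_cfp: float, team_cfp_per_month: float = 140) -> float:
    """Rough delivery schedule in calendar months from functional size.

    Below 1000 CFP: size divided by team throughput (140 CFP per month by default).
    From 1000 CFP upwards: size ** 0.4, reflecting diminishing productivity.
    """
    if size_cfp < 1000:
        return size_cfp / team_cfp_per_month
    return size_cfp ** 0.4


for size in (350, 700, 2000, 5000):
    print(size, round(schedule_months(size), 1))
```

The two rules do not join up: 999 CFP gives about 7 months while 1000 CFP gives about 16, so estimates near the boundary deserve extra scrutiny.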

Note that delivering functionality with poor quality will slow down the project overall. Only with high quality can the fastest schedules be achieved.

Tip: If a CFP has been delivered but a defect is discovered in a subsequent sprint, you don’t count it as delivered.
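Applied to sprint tracking, this tip means delivered size is the sum of CFP for items with no open defect. A minimal sketch, with a hypothetical `Item` record and example item names:

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    cfp: int
    open_defect: bool = False  # a defect found after the item was reported delivered


def delivered_cfp(items: list[Item]) -> int:
    """Count only items that have no open defect."""
    return sum(item.cfp for item in items if not item.open_defect)


backlog = [
    Item("Create order", 12),
    Item("Amend order", 9, open_defect=True),
    Item("Cancel order", 6),
]
print(delivered_cfp(backlog))  # 18
```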

Benchmarking Delivered Value

If a company spends $1000 to deliver 1 CFP of new functionality, it would expect the business value of that functionality to exceed that $1000 cost over both the short and the long term. The value per CFP will vary, but companies should be looking for a 1-3 year return of many times the cost per CFP. The 3-year commercial benefit per CFP is a useful metric to track and can be benchmarked.
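One way to benchmark this is to express the 3-year benefit per CFP as a multiple of the build cost per CFP, so projects of different sizes can be compared. The portfolio figures below are hypothetical, as is the function name.

```python
def value_multiple(benefit_3yr_per_cfp: float, cost_per_cfp: float = 1000) -> float:
    """3-year commercial benefit per CFP as a multiple of build cost per CFP."""
    return benefit_3yr_per_cfp / cost_per_cfp


# Hypothetical projects: (3-year benefit, CFP delivered, build cost).
projects = {
    "Project A": (2_400_000, 600, 600_000),
    "Project B": (450_000, 300, 300_000),
}
for name, (benefit, cfp, cost) in projects.items():
    print(name, value_multiple(benefit / cfp, cost / cfp))
# Project A 4.0
# Project B 1.5
```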

Total Cost of Ownership

Beyond the initial $1000 build cost of a CFP, companies should factor in the total cost of ownership (TCO) over the lifetime of that software. For example, in the first two years after the initial build there are likely to be considerable improvements made: circa 20% in the first year, a further 15% in the second, and typically 8% per year thereafter. Beyond that there is the annual maintenance of the software, either to keep it working on ever-changing infrastructure or to fix bugs that appear (Capers Jones). You can establish your own internal TCO benchmarks for the software applications in your portfolio; this data will help with decisions about system replacement plans.

Rule of thumb: the 5-year TCO is typically 2-3x the initial cost.
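The enhancement percentages alone add up to about 1.6x the build cost over five years (1 + 0.20 + 0.15 + 3 × 0.08 = 1.59), so maintenance accounts for the rest of the 2-3x. The sketch below assumes the five years are counted from the end of the build. `maintenance_rate` is a hypothetical annual maintenance cost as a fraction of build cost, since no figure is given for it; at an assumed 15% the result lands inside the rule of thumb.

```python
def tco_multiple(years: int = 5,
                 enhancement_rates: tuple[float, ...] = (0.20, 0.15),
                 later_rate: float = 0.08,
                 maintenance_rate: float = 0.0) -> float:
    """Cumulative cost over `years` after the build, as a multiple of build cost.

    Enhancement spend: 20% in year 1, 15% in year 2, 8% per year after that.
    maintenance_rate is a hypothetical yearly maintenance cost (fraction of build cost).
    """
    total = 1.0  # the initial build
    for year in range(1, years + 1):
        if year <= len(enhancement_rates):
            enhancement = enhancement_rates[year - 1]
        else:
            enhancement = later_rate
        total += enhancement + maintenance_rate
    return total


print(round(tco_multiple(), 2))                       # 1.59
print(round(tco_multiple(maintenance_rate=0.15), 2))  # 2.34
```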

Benchmarking Scope Change

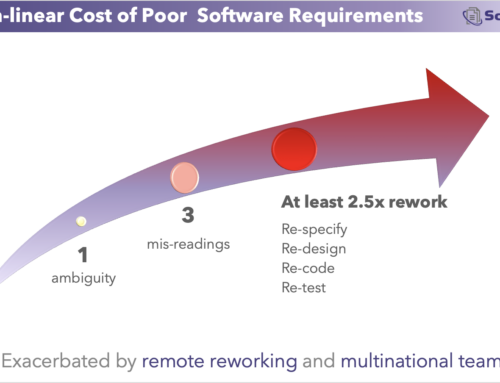

During a project, new requirements will arise or become apparent. Some of these are knowable up front; some are not. Organisations that spend adequately on requirements work (15% or more for custom development, higher for package implementations such as ERPs) will not only achieve shorter timelines but will also experience less scope change during the project (Capers Jones).

Rule of thumb: 1-2% scope change per month during a project.

Rule of thumb: 8% per annum scope change after implementation.
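These two rates can be used to project scope growth. The sketch applies each rate to the starting size (simple, not compounded); that is an assumption, since the rules of thumb do not say which. The function name and project sizes are hypothetical.

```python
def grown_scope(start_cfp: float, periods: float, rate_per_period: float) -> float:
    """Scope after `periods` of change at `rate_per_period` of the starting size.

    Growth is applied to the starting size only (not compounded): an assumption.
    """
    return start_cfp * (1 + rate_per_period * periods)


# A hypothetical 1000 CFP project running for 10 months at 1-2% per month.
low, high = grown_scope(1000, 10, 0.01), grown_scope(1000, 10, 0.02)
print(round(low), round(high))            # 1100 1200

# Three years after go-live at about 8% per annum, starting from 1150 CFP.
print(round(grown_scope(1150, 3, 0.08)))  # 1426
```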

Benchmarking Technical Debt

Technical debt is the rework that arises from requirements emerging that were not known when the code was first written. To quantify and benchmark technical debt, one must first carefully classify the rework that is caused by:

- Mistakes in code (not technical debt)

- Shortcuts made for rapid deployment (not technical debt in the original concept but commonly thought to be so)

- Rework because insufficient requirements work was done (knowable unknowns arising later). This is avoidable technical debt.

- Rework because requirements emerged later that were not knowable earlier. (This is the unavoidable genuine technical debt.)

Most organisations apply these classifications inconsistently and so will find benchmarking technical debt difficult.
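Consistent classification is easier if every rework entry is tagged with exactly one of the four causes at the time it is logged. A minimal sketch, with a hypothetical `ReworkCause` enum and example log: shortcuts are kept out of both technical debt figures but remain in the totals, so they can still be reported if an organisation chooses to count them.

```python
from collections import defaultdict
from enum import Enum


class ReworkCause(Enum):
    """The four rework causes listed above."""
    CODE_MISTAKE = "Mistake in code (not technical debt)"
    SHORTCUT = "Shortcut for rapid deployment"
    INSUFFICIENT_REQUIREMENTS = "Knowable requirement found late (avoidable technical debt)"
    EMERGENT_REQUIREMENTS = "Unknowable requirement emerged later (genuine technical debt)"


def rework_by_cause(records: list[tuple[ReworkCause, float]]) -> dict[ReworkCause, float]:
    """Total rework hours per cause, so each category can be benchmarked separately."""
    totals: dict[ReworkCause, float] = defaultdict(float)
    for cause, hours in records:
        totals[cause] += hours
    return dict(totals)


def technical_debt_hours(totals: dict[ReworkCause, float]) -> tuple[float, float]:
    """Return (avoidable, unavoidable) technical debt hours."""
    return (totals.get(ReworkCause.INSUFFICIENT_REQUIREMENTS, 0.0),
            totals.get(ReworkCause.EMERGENT_REQUIREMENTS, 0.0))


# Hypothetical rework log entries: (cause, hours).
log = [
    (ReworkCause.CODE_MISTAKE, 30),
    (ReworkCause.INSUFFICIENT_REQUIREMENTS, 45),
    (ReworkCause.EMERGENT_REQUIREMENTS, 20),
    (ReworkCause.SHORTCUT, 15),
    (ReworkCause.INSUFFICIENT_REQUIREMENTS, 5),
]
totals = rework_by_cause(log)
print(technical_debt_hours(totals))  # (50.0, 20.0)
```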


Other Benchmarks for Software work

Engineers per customer/user

This number is often cited by consumer SaaS products such as Facebook and Twitter, more frequently expressed as thousands of users per engineer. It is a useful metric for such organisations.

Revenue per DevOps Engineer

In software intensive businesses, where technical staff are a primary cost of the business, tracking overall revenue per DevOps engineer is a broad indicator of technical efficiency.

DORA Metrics

Deployment Frequency

Deployment frequency is a measure of the number of times new code (of any quantity) is released to production. It is a useful indicator of the repeatability of the dev/test cycle. The interval between deployments should be measured in hours or days, not weeks.

Mean Time to Restore

Mean Time to Restore tracks the elapsed time it takes to restore service after a failure in production. This is an indicator of the ability of a team to recover from a mistake. It should be measured in minutes or hours, not days.

Lead Time for Changes

Lead Time for Changes tracks the time code takes to go from committed to successfully running in production. It takes no account of the magnitude, value or complexity of the change. It measures deployment efficiency rather than development efficiency.

Change Failure Rate

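The change failure rate is the percentage of deployments to production that cause a failure needing remediation (such as a rollback or hotfix). It is an indicator of the quality of deployed code.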
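All four metrics can be derived from a simple log of deployments. A minimal sketch, assuming a hypothetical `Deployment` record per release and assuming that an outage starts at the failed deployment; it uses the median for lead time (a common choice) and the mean for time to restore (as the metric’s name says).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it was running in production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deployments: list[Deployment], period_days: float) -> dict:
    """Compute the four DORA metrics for the deployments in a period."""
    if not deployments:
        raise ValueError("no deployments in the period")
    failures = [d for d in deployments if d.failed]
    # Assumption: the outage starts at the failed deployment.
    restore_times = [hours(d.restored_at - d.deployed_at)
                     for d in failures if d.restored_at is not None]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(hours(d.deployed_at - d.committed_at)
                                         for d in deployments),
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_restore_hours": mean(restore_times) if restore_times else None,
    }


t0 = datetime(2024, 1, 1, 9, 0)
week = [
    Deployment(t0, t0 + timedelta(hours=2)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=4),
               failed=True, restored_at=t0 + timedelta(days=1, hours=4, minutes=30)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=1),
               failed=True, restored_at=t0 + timedelta(days=3, hours=2, minutes=30)),
]
print(dora_metrics(week, period_days=7))
```

Note that none of these figures refers to the size or value of what was deployed, which is why they suit DevOps benchmarking rather than project planning.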

Advantages of DORA metrics

They are easy to track.

They are focussed on DevOps activities.

Disadvantages of DORA metrics

They focus on change rates regardless of size or customer value.

They are mostly unsuitable for project planning, sizing and tracking.

They are only suitable for benchmarking DevOps or DevSecOps activities.

Harmful Benchmarks for Software work

Organisations often mistakenly capture metrics that can lead to unintended consequences. These should be used with great caution or avoided altogether, and should not be used as a basis for benchmarking.

Lines of code produced per engineer

This can cause developers to write verbose code rather than efficient or reused code. Instead, use CFP produced per engineer.

Defects produced per month per engineer

The number of defects produced should be considered in the context of the output. A developer who produces 1 defect per month in just 1 CFP is less capable than one who produces 2 defects per month in 10 CFP. Instead, use defects per CFP produced per engineer.

Cost to detect a defect

The higher the quality of the software, the more costly it is to find each defect. A high cost per defect discovered can mean that the software is already of high quality.
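Both points can be made concrete with a little arithmetic. The first function normalises the two developers from the example by output. The second shows why cost per defect rises as quality improves: the fixed cost of preparing and running tests is spread over fewer defects. The dollar figures and function names are hypothetical.

```python
def defects_per_cfp(defects: int, cfp_delivered: float) -> float:
    """Defect density: defects normalised by functionality delivered."""
    return defects / cfp_delivered


def cost_per_defect(fixed_test_cost: float, fix_cost_each: float, defects_found: int) -> float:
    """Total test-and-fix spend divided by the number of defects found."""
    return (fixed_test_cost + fix_cost_each * defects_found) / defects_found


# The two developers from the example: 1 defect in 1 CFP vs 2 defects in 10 CFP.
print(defects_per_cfp(1, 1), defects_per_cfp(2, 10))  # 1.0 0.2

# Hypothetical costs: $10,000 to write and run the tests, $500 to fix each defect.
print(cost_per_defect(10_000, 500, 100))  # 600.0  (lower-quality code)
print(cost_per_defect(10_000, 500, 10))   # 1500.0 (higher-quality code)
```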

Internal Benchmarking Tips

- Disclose the names of projects on internal comparative benchmarks.

- Show component metrics not just the overall values.

- Include non-coding activities.

- Include human aspects.

- Use functional size metrics.

- Do not use the data to set abstract targets.

Summarised from a Capers Jones presentation on benchmarking.