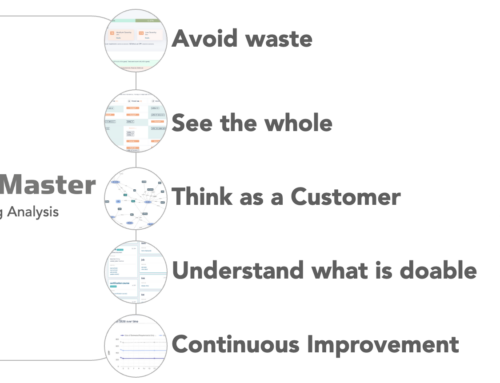

Start user story testing

On most software projects, user stories are a terse reminder of the conversations between product owner, developer and tester. Although user stories are very short, the form is commonly misused, which leads to ambiguity, unnecessary discussion and rework. In this article we set out how to test user stories so that you can ensure they are high quality, reduce rework and shorten timelines.

I have enjoyed considerable debate online about the validity of even thinking about user story quality. If you accept that clear user stories are helpful to a project, then the concept of user story testing should make sense to you.

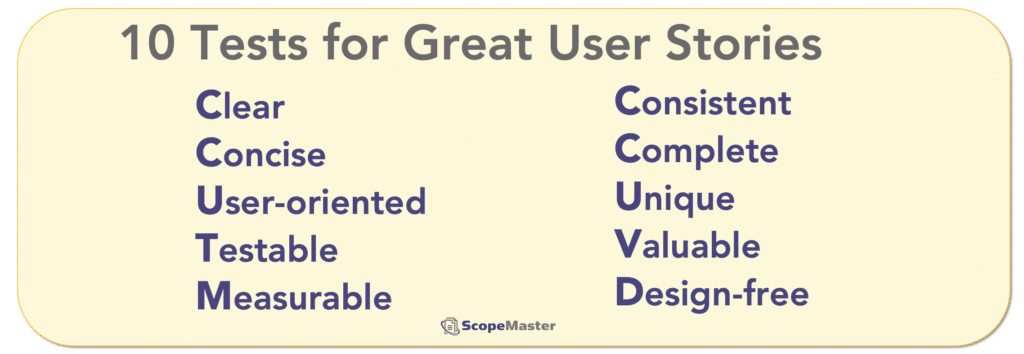

Learn these ten tests for writing great user stories.

1. Clear

Your user stories must be clear and unambiguous. Product owner, developer and tester should all have a common understanding of what is to be delivered from the text of the story. As you write your stories, assume that if they can potentially be misinterpreted, they will. Also, make sure that your stories include all necessary functionality (excluding navigation).

Tip: Focus on the functional intent of the user story, e.g. “As a registered_user I can update my profile”.

2. Concise

Keep them short. Stories do not need to be lengthy to convey the essential functional meaning. One or more short sentences may be sufficient. Remember that the acceptance criteria are supplementary to the story.

Tip: Avoid describing navigation, implementation details and object attributes.

Tip: Separate the acceptance criteria from the core user story.

3. User-oriented

A story should be written from the user’s perspective. The typical agile recommendation is the format:

As a user_type I want to perform_something so that reason

In some cases, the user_type may be another piece of software, or perhaps even a device interacting with the software, such as a sensor.

Tip: Never write your stories from the developer’s perspective.

Tip: Avoid the general term “user”; instead, use rich, consistent labels for the user type or role, such as registered_user or unauthenticated_visitor.
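The template and the tip above lend themselves to a simple automated check. The following is a minimal Python sketch, not ScopeMaster's method: it assumes role labels are single snake_case tokens (like registered_user), accepts either “I want to” or “I can”, and treats the bare word “user” as too generic. STORY_PATTERN, GENERIC_ROLES and check_user_oriented are hypothetical names chosen for illustration.

```python
import re

# Hypothetical pattern for "As a <role> I want to <action> so that <reason>".
# Roles are assumed to be single snake_case labels such as registered_user.
STORY_PATTERN = re.compile(
    r"^As an? (?P<role>[a-z][a-z_]*),? I (?:want to|can) (?P<action>.+?)"
    r"(?: so that (?P<reason>.+?))?\.?$",
    re.IGNORECASE,
)

GENERIC_ROLES = {"user"}  # too vague to identify who benefits


def check_user_oriented(story: str) -> list[str]:
    """Return the problems found in a story; an empty list means it passed."""
    match = STORY_PATTERN.match(story.strip())
    if match is None:
        return ["does not follow 'As a <role> I want to <action> so that <reason>'"]
    problems = []
    if match.group("role").lower() in GENERIC_ROLES:
        problems.append("role is the generic term 'user'")
    if match.group("reason") is None:
        problems.append("missing 'so that' reason")
    return problems


# Flags both the generic role and the missing reason.
print(check_user_oriented("As a user I want to update my profile"))
```

A regular expression only checks the shape of the sentence; it cannot judge whether the role or reason is meaningful, so a pass is necessary rather than sufficient.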

4. Testable

A story is testable if it contains clear statement(s) of functionality. Use phrases that imply data movement, storage and retrieval. Examples of testable stories include phrases such as “update profile”, “display sales report” and “send email”. Writing your stories this way ensures that the core functional intent is clear, and this provides the foundation from which test scenarios can be generated. The full test suite will usually depend on supplementary, detailed acceptance criteria.

Tip: The detailed acceptance criteria are supplementary to the core user story; do not embed acceptance criteria within the text of the user story.
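A crude way to screen for testability is to look for verbs that imply data movement, storage or retrieval. The sketch below assumes a small, hand-picked verb list (DATA_MOVEMENT_VERBS is hypothetical and would need tuning for your domain); a story that contains none of these verbs is flagged for review rather than rejected outright.

```python
# Hypothetical vocabulary of verbs that imply data movement, storage or retrieval.
# A real team would tune this list to its own domain.
DATA_MOVEMENT_VERBS = {
    "add", "create", "delete", "display", "export", "import",
    "list", "print", "receive", "remove", "save", "search",
    "send", "show", "store", "update", "view",
}


def functional_phrases(story: str) -> list[str]:
    """Return the data-movement verbs found in a story, in order of appearance."""
    words = [w.strip(".,;:!?\"'").lower() for w in story.split()]
    return [w for w in words if w in DATA_MOVEMENT_VERBS]


def is_testable(story: str) -> bool:
    # A story with no recognisable data-movement verb gives testers nothing concrete to check.
    return bool(functional_phrases(story))


print(functional_phrases("As a sales_manager I want to display the sales report and send an email"))
print(is_testable("As a registered_user I want a better experience"))
```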

5. Measurable

By measurable, I mean measurable in size, specifically using the COSMIC function point (CFP) as the basis for sizing. COSMIC is a mature, second-generation ISO standard, suitable for all types of software work. User stories can only be measured if they clearly express all of the data movements they require. Measurability significantly helps with both planning and quality assurance. Functional size is not the only measurable attribute of a user story; however, it is one of the most important, because it relates very closely to the effort needed to build it.

Tip: Failure to measure size adds uncertainty to your software work.

Tip: Learn about the COSMIC sizing methodology from this guide.
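To illustrate what a clear expression of data movements means in practice: COSMIC identifies four types of data movement (Entry, Exit, Read and Write), and each one contributes 1 CFP to the size of a functional process. The Python sketch below records the movements for a story and sums them. The breakdown of “update my profile” into four movements is a hypothetical example, not an official measurement, and the sketch assumes that a repeated movement of the same data group by the same type is counted only once.

```python
from dataclasses import dataclass
from enum import Enum


class Movement(Enum):
    """The four COSMIC data-movement types."""
    ENTRY = "E"  # data enters the software from a functional user
    EXIT = "X"   # data leaves the software to a functional user
    READ = "R"   # data is retrieved from persistent storage
    WRITE = "W"  # data is moved to persistent storage


@dataclass(frozen=True)
class DataMovement:
    kind: Movement
    data_group: str  # the object of interest being moved, e.g. "profile"


def size_in_cfp(movements: list[DataMovement]) -> int:
    """Each distinct data movement contributes 1 CFP.

    Assumption: repeats of the same type and data group are counted once.
    """
    return len(set(movements))


# Hypothetical breakdown of "As a registered_user I want to update my profile".
update_profile = [
    DataMovement(Movement.ENTRY, "profile"),              # user submits the changes
    DataMovement(Movement.READ, "profile"),               # current profile is retrieved
    DataMovement(Movement.WRITE, "profile"),              # updated profile is stored
    DataMovement(Movement.EXIT, "confirmation message"),  # user is told it worked
]
print(size_in_cfp(update_profile))  # 4
```

A story that cannot be broken down like this, because the data groups or movements are not evident from its text, is a sign that it is not yet measurable.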

6. Consistent

Use consistent words for both user_types and object_types across a set of user stories. Consistent naming will reduce confusion, defects, rework and waste. In complex systems and jargon-heavy environments, team members tend to give the same user or object different names.
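Consistency can be checked mechanically once a team agrees a glossary. This minimal sketch assumes a hypothetical GLOSSARY that maps each preferred label to synonyms the team has agreed not to use, and reports any discouraged term that appears in a set of stories.

```python
import re

# Hypothetical team glossary: preferred label -> synonyms the team has agreed to avoid.
# Only single-word or snake_case terms are matched by this sketch.
GLOSSARY = {
    "registered_user": {"member", "account_holder"},
    "customer_order": {"purchase", "basket"},
}


def inconsistent_terms(stories: list[str]) -> dict[str, str]:
    """Map each discouraged synonym found in the stories to its preferred label."""
    found = {}
    for story in stories:
        tokens = set(re.findall(r"[a-z_]+", story.lower()))
        for preferred, synonyms in GLOSSARY.items():
            for synonym in synonyms & tokens:
                found[synonym] = preferred
    return found


stories = [
    "As a registered_user I want to update my profile",
    "As a member I want to view my purchase history",
]
print(inconsistent_terms(stories))
```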

7. Complete

Missing requirements are one of the biggest causes of software project failure. Most projects grow in size as additional needs become apparent. This increase in scope leads to more work, more rework, extended timelines, budget overruns and, in some cases, complete project failure. Although the Agile approach discourages excessive up-front work, some up-front scoping is essential. Look carefully for missing requirements when you write your user stories.

8. Unique

All requirements should be unique. Duplicated requirements are a problem that tends to be more prevalent in larger projects.
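Exact duplicates are easy to catch automatically. The sketch below (story IDs such as US-1 are hypothetical) normalises case, punctuation and whitespace, then groups stories whose text is identical. Near-duplicates that are worded differently need a fuzzier comparison, for example difflib.SequenceMatcher from the Python standard library, plus human review.

```python
import re
from collections import defaultdict


def normalise(story: str) -> str:
    """Lower-case the text and keep only word tokens, so punctuation and spacing are ignored."""
    return " ".join(re.findall(r"[a-z0-9_]+", story.lower()))


def find_duplicates(stories: dict[str, str]) -> list[list[str]]:
    """Group the IDs of stories whose normalised text is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for story_id, text in stories.items():
        groups[normalise(text)].append(story_id)
    return [ids for ids in groups.values() if len(ids) > 1]


backlog = {
    "US-1": "As a registered_user I want to update my profile.",
    "US-7": "As a Registered_User, I want to  update my profile",
    "US-9": "As an admin I want to delete a profile",
}
print(find_duplicates(backlog))  # [['US-1', 'US-7']]
```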

9. Valuable

All user stories should be valuable to the “business”. It is appropriate to challenge the value and importance of each user story, so that only the most important functionality is delivered. If a user story cannot be traced back to a measurable business outcome, it may not be valuable and should perhaps be de-scoped. The actual financial value of a story may be hard to measure; functional size (CFP) can serve as a proxy for value, especially for earned value measurement (EVM).
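If functional size is used as the basis for value, earned value is the budget multiplied by the fraction of total CFP that has been delivered. The function below is a minimal sketch of that arithmetic; it assumes value accrues in proportion to the CFP of accepted stories, and the figures in the example are invented.

```python
def earned_value(budget_at_completion: float, delivered_cfp: int, total_cfp: int) -> float:
    """Earned value, using delivered functional size as the measure of progress.

    Assumes value accrues in proportion to the CFP of accepted user stories.
    """
    if total_cfp <= 0:
        raise ValueError("total_cfp must be positive")
    if not 0 <= delivered_cfp <= total_cfp:
        raise ValueError("delivered_cfp must be between 0 and total_cfp")
    return budget_at_completion * delivered_cfp / total_cfp


# Invented figures: a 400 CFP backlog with a budget of 200,000, of which 120 CFP is delivered.
print(earned_value(200_000, 120, 400))  # 60000.0
```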

10. Design-free

User stories should not refer to the technology used to deliver them. This level of detail can be provided as a supplement to the user story, to give context about how it should be delivered. This is particularly suitable for the non-functional aspects of how the functionality will be achieved.

Recommendation

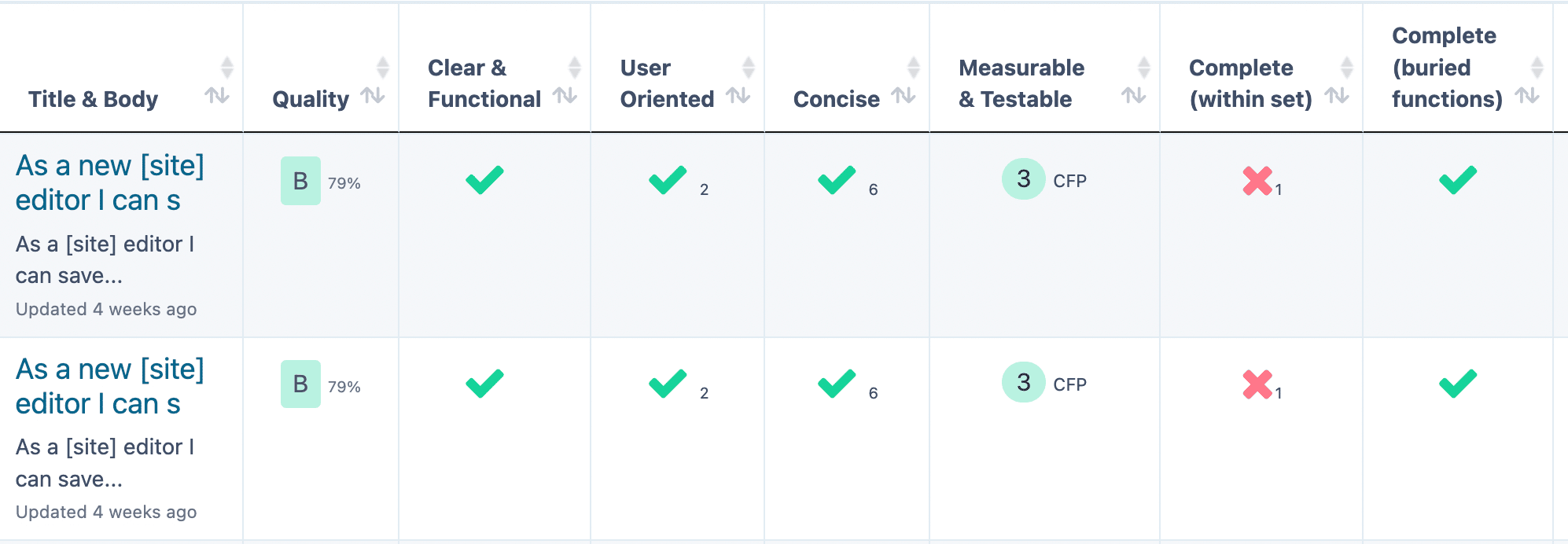

Let ScopeMaster test your user stories for you: just import them and press the green button. Its intelligent language analysis will assess their quality and make recommendations to improve them.

Conclusion

As we move towards more remote working, our dependence on the written word in software development increases. Spend time getting the words right. Good-quality user stories are the foundation of quality software. Test your user stories before you start coding, to avoid wasting time.

Each of these ten tests represents a quality attribute of a good user story. You can use them as a checklist to run against each user story that you write. Testing user stories pays significant dividends by reducing rework, scope creep and scope volatility. And if you want to do it faster, try ScopeMaster; it does the heavy lifting of user story testing.